16. ch 04. Sentiment Classification - 02. Korean Text Data Preprocessing Practice - 17. ch 04. Sentiment Classification - 03. Positive/Negative Keywords with Logistic Regression

16. ch 04. Sentiment Classification - 02. Korean Text Data Preprocessing Practice

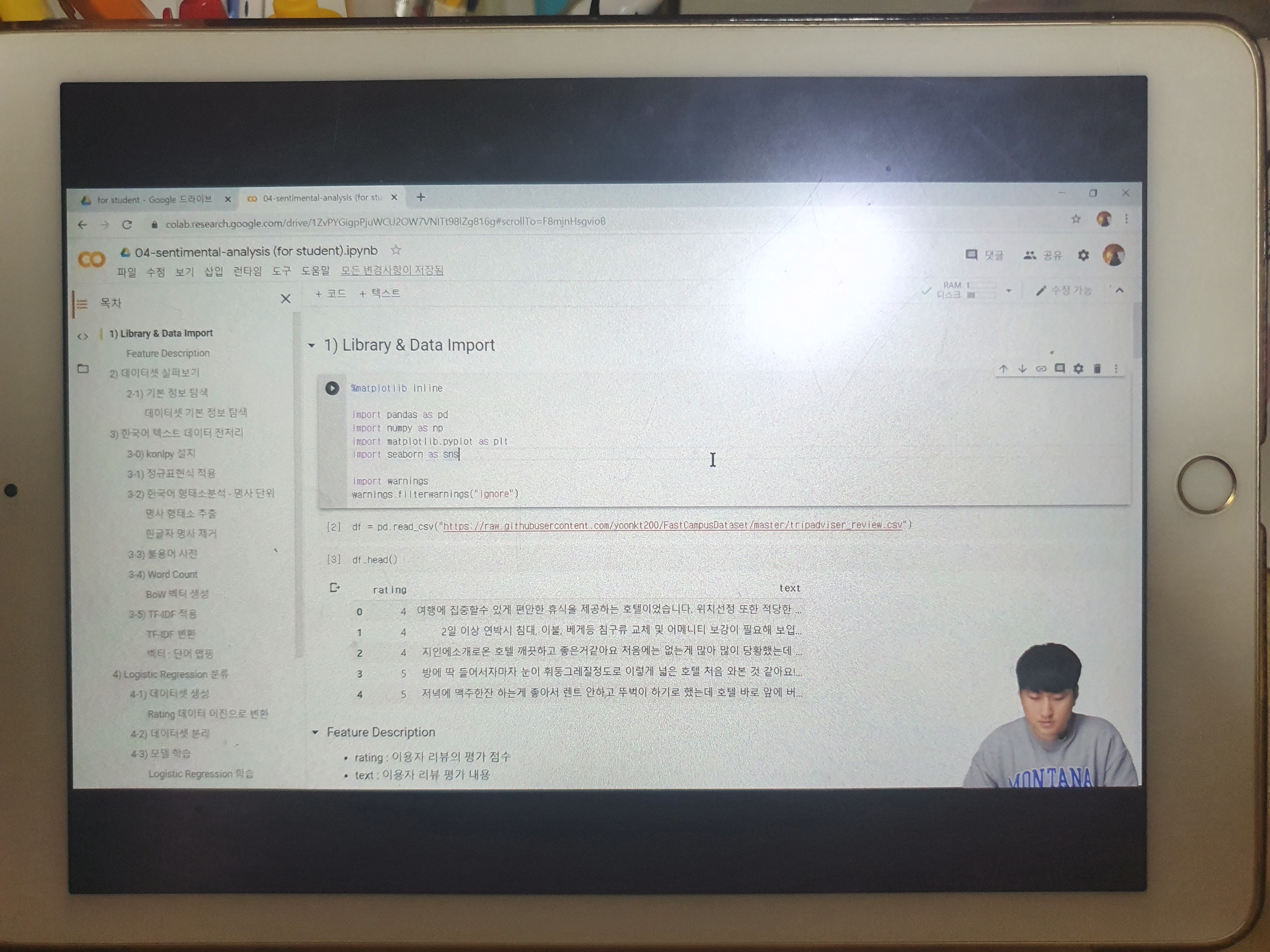

1) Library & Data Import

```python
%matplotlib inline

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import warnings

warnings.filterwarnings("ignore")

df = pd.read_csv("https://raw.githubusercontent.com/yoonkt200/FastCampusDataset/master/tripadviser_review.csv")
df.head()
```

2) Exploring the Dataset

2-1) Basic Information

Exploring the dataset's basic properties

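```python
df.shape
df.isnull().sum()
df.info()
df['text'][0]

# Summing a column of strings concatenates them, so this is the total character count of all reviews
len(df['text'].values.sum())
```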

3) Korean Text Data Preprocessing

3-0) Installing konlpy

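```python
# KoNLPy's taggers (including Okt) run on the JVM, so a Java runtime must also be available
!pip install konlpy==0.5.1 jpype1 Jpype1-py3
```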

3-1) Applying a Regular Expression

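```python
import re

def apply_regular_expression(text):
    hangul = re.compile('[^ ㄱ-ㅣ가-힣]')  # matches every character that is NOT a space, Hangul jamo, or Hangul syllable
    result = hangul.sub('', text)          # delete those non-Hangul characters
    return result

apply_regular_expression(df['text'][0])
```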
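To see exactly what the pattern keeps, here is a minimal stand-alone sketch run on made-up strings (the sample inputs are hypothetical, not from the dataset). It also shows a side effect worth knowing: only the ASCII space survives, so a newline between two words is deleted and the words are glued together.

```python
import re

# Same character class as apply_regular_expression: keep the space, Hangul
# compatibility jamo (ㄱ-ㅣ) and complete syllables (가-힣); drop everything else.
HANGUL_ONLY = re.compile('[^ ㄱ-ㅣ가-힣]')

def keep_hangul(text):
    return HANGUL_ONLY.sub('', text)

# Hypothetical inputs mixing punctuation, digits, Latin letters and a newline
mixed = keep_hangul("방이 깨끗해요!! 5점 ㅎㅎ good")
multiline = keep_hangul("좋아요\n추천")

print(repr(mixed))      # '방이 깨끗해요 점 ㅎㅎ '
print(repr(multiline))  # '좋아요추천'
```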

3-2) Korean Morphological Analysis - Noun Units

Extracting noun morphemes

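```python
from konlpy.tag import Okt
from collections import Counter

nouns_tagger = Okt()

# Nouns from the first review
nouns = nouns_tagger.nouns(apply_regular_expression(df['text'][0]))
nouns

# Nouns from all reviews; join with a space so the last word of one review
# does not fuse with the first word of the next
nouns = nouns_tagger.nouns(apply_regular_expression(" ".join(df['text'].tolist())))
counter = Counter(nouns)
counter.most_common(10)
```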
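Why the separator matters when all reviews are concatenated: a minimal sketch with three made-up reviews, where a plain whitespace split stands in for `Okt().nouns()` (an assumption made so the example runs without konlpy and Java).

```python
from collections import Counter

# Hypothetical reviews; whitespace splitting stands in for Okt().nouns()
reviews = ["위치 좋은 호텔", "호텔 직원 친절", "직원 추천"]

def count_tokens(text):
    return Counter(text.split())

fused = count_tokens("".join(reviews))       # produces '호텔호텔' and '친절직원'
separated = count_tokens(" ".join(reviews))

print(fused["호텔"], fused["호텔호텔"])       # 0 1
print(separated["호텔"], separated["직원"])  # 2 2
```

With `"".join`, boundary words silently turn into spurious tokens and the real nouns are undercounted.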

Removing one-character nouns

```python
available_counter = Counter({x: counter[x] for x in counter if len(x) > 1})
available_counter.most_common(10)
```

3-3) Stopword Dictionary

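```python
# Assuming the file holds one word per line with no header row: header=None keeps the
# first word, and [0].tolist() gives a flat list of strings. (.values.tolist() would return
# one-element lists, which the later `x not in stopwords` check never matches.)
stopwords = pd.read_csv("https://raw.githubusercontent.com/yoonkt200/FastCampusDataset/master/korean_stopwords.txt", header=None)[0].tolist()
print(stopwords[:10])

# Domain-specific stopwords for Jeju hotel reviews
jeju_hotel_stopwords = ['제주', '제주도', '호텔', '리뷰', '숙소', '여행', '트립']
stopwords.extend(jeju_hotel_stopwords)
```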
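The shape of the stopword list decides whether the `x not in stopwords` filter works at all. The nested list below mimics what `.values.tolist()` returns for a one-column file (the words are a hypothetical excerpt). The set at the end is an optional speed-up, since membership tests on a set take constant time on average.

```python
# Hypothetical excerpt shaped like pd.read_csv(...).values.tolist(): one list per row
nested = [["휴"], ["아이구"], ["아이쿠"]]
print("아이구" in nested)  # False: a str never equals a one-element list

flat = [row[0] for row in nested]
print("아이구" in flat)    # True

# Optional: a set makes each `not in` check constant time on average
stopword_set = set(flat) | {"제주", "호텔"}
tokens = ["호텔", "조식", "아이구", "직원"]  # hypothetical nouns
kept = [t for t in tokens if t not in stopword_set]
print(kept)  # ['조식', '직원']
```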

3-4) Word Count

Creating BoW vectors

```python
from sklearn.feature_extraction.text import CountVectorizer

def text_cleaning(text):
    hangul = re.compile('[^ ㄱ-ㅣ가-힣]')
    result = hangul.sub('', text)
    nouns = nouns_tagger.nouns(result)                # reuse the Okt instance created above
    nouns = [x for x in nouns if len(x) > 1]          # drop one-character nouns
    nouns = [x for x in nouns if x not in stopwords]  # drop stopwords
    return nouns

vect = CountVectorizer(tokenizer=text_cleaning)
bow_vect = vect.fit_transform(df['text'].tolist())
word_list = vect.get_feature_names_out()  # get_feature_names() was removed in scikit-learn 1.2
count_list = bow_vect.toarray().sum(axis=0)

word_list
count_list
bow_vect.shape
bow_vect.toarray()
bow_vect.toarray().sum(axis=0)
bow_vect.toarray().sum(axis=0).shape

word_count_dict = dict(zip(word_list, count_list))
word_count_dict
```
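What `CountVectorizer` with a custom tokenizer computes can be sketched in plain Python: a vocabulary sorted by word (scikit-learn also assigns column indices in sorted order), one count row per document, and column sums that correspond to `count_list`. The tokenized documents are hypothetical stand-ins for `text_cleaning` output, and the real vectorizer returns a sparse matrix rather than nested lists.

```python
from collections import Counter

def bag_of_words(tokenized_docs):
    # Column index for each word, assigned in sorted order like CountVectorizer
    words = sorted({token for doc in tokenized_docs for token in doc})
    vocabulary = {word: i for i, word in enumerate(words)}
    matrix = []
    for doc in tokenized_docs:
        row = [0] * len(vocabulary)
        for word, n in Counter(doc).items():
            row[vocabulary[word]] = n
        matrix.append(row)
    return vocabulary, matrix

# Hypothetical text_cleaning() output for two reviews
docs = [["직원", "친절", "직원"], ["조식", "친절"]]
vocab, bow = bag_of_words(docs)

word_list = sorted(vocab, key=vocab.get)
count_list = [sum(column) for column in zip(*bow)]  # like bow_vect.toarray().sum(axis=0)
word_count = dict(zip(word_list, count_list))

print(vocab)       # {'조식': 0, '직원': 1, '친절': 2}
print(bow)         # [[0, 2, 1], [1, 0, 1]]
print(word_count)  # {'조식': 1, '직원': 2, '친절': 2}
```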

3-5) Applying TF-IDF

TF-IDF transformation

```python
from sklearn.feature_extraction.text import TfidfTransformer

tfidf_vectorizer = TfidfTransformer()
tf_idf_vect = tfidf_vectorizer.fit_transform(bow_vect)

print(tf_idf_vect.shape)
print(tf_idf_vect[0])
```
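With its defaults (`smooth_idf=True`, `norm='l2'`, raw term counts), `TfidfTransformer` multiplies each count by idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) the number of documents containing t, and then scales every row to unit length. Below is a minimal pure-Python sketch of that formula on a hypothetical 2 x 3 count matrix. It is meant to illustrate the arithmetic, not to replace the library.

```python
import math

def tfidf(bow):
    # Smoothed idf as in TfidfTransformer(smooth_idf=True): ln((1 + n) / (1 + df)) + 1
    n_docs = len(bow)
    n_terms = len(bow[0])
    doc_freq = [sum(1 for row in bow if row[j] > 0) for j in range(n_terms)]
    idf = [math.log((1 + n_docs) / (1 + d)) + 1 for d in doc_freq]

    weighted_rows = []
    for row in bow:
        weighted = [tf * w for tf, w in zip(row, idf)]
        # L2-normalize each row (norm='l2')
        norm = math.sqrt(sum(v * v for v in weighted))
        weighted_rows.append([v / norm if norm else 0.0 for v in weighted])
    return idf, weighted_rows

# Hypothetical count matrix: 2 reviews x 3 words
idf, weights = tfidf([[0, 2, 1], [1, 0, 1]])
print([round(v, 3) for v in idf])  # [1.405, 1.405, 1.0]
```

A word that appears in every review gets the minimum idf of 1.0, so it carries less weight than rarer words.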

Mapping vector indices to words

```python
invert_index_vectorizer = {v: k for k, v in vect.vocabulary_.items()}
print(str(invert_index_vectorizer)[:100] + '..')
```
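`invert_index_vectorizer` turns a column index back into a word, and that is enough to read a single TF-IDF row. For a CSR row such as `tf_idf_vect[0]`, the nonzero column indices and weights are available as `.indices` and `.data`. The sketch below replaces them with a plain dict of hypothetical values so it runs on its own.

```python
vocabulary_ = {"조식": 0, "직원": 1, "친절": 2}  # hypothetical, same shape as vect.vocabulary_
invert_index = {v: k for k, v in vocabulary_.items()}

# Hypothetical nonzero entries of one row: column index -> tf-idf weight
row = {2: 0.58, 1: 0.81}

# Words of this review ordered from highest to lowest weight
ranked_words = [(invert_index[i], w) for i, w in sorted(row.items(), key=lambda kv: kv[1], reverse=True)]
print(ranked_words)  # [('직원', 0.81), ('친절', 0.58)]
```

In the notebook, the dict could be built as `dict(zip(tf_idf_vect[0].indices, tf_idf_vect[0].data))`.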

17. ch 04. Sentiment Classification - 03. Positive/Negative Keywords with Logistic Regression

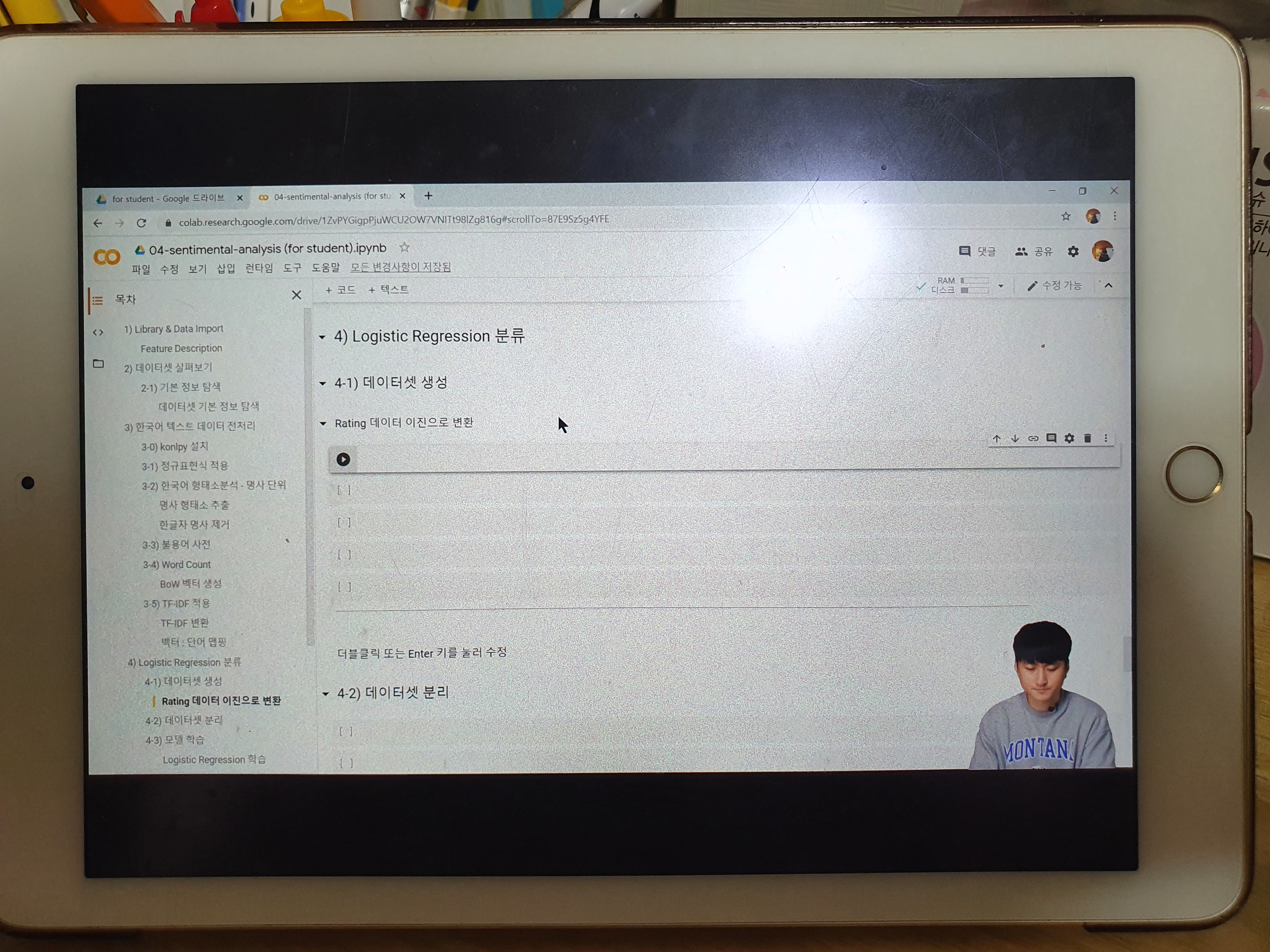

4) Logistic Regression Classification

4-1) Building the Dataset

Converting ratings to binary labels

```python
df.head()
df.rating.hist()

def rating_to_label(rating):
    # Ratings above 3 (4 or 5 on a 5-point scale) are positive (1); 3 or below is negative (0)
    if rating > 3:
        return 1
    else:
        return 0

df['y'] = df['rating'].apply(rating_to_label)
df.head()
df.y.value_counts()
```
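The threshold places a neutral rating of 3 on the negative side. Here is a small stand-alone check on hypothetical ratings, assuming a 1-5 scale:

```python
from collections import Counter

def rating_to_label(rating):
    # Same rule as above: only ratings above 3 count as positive
    return 1 if rating > 3 else 0

ratings = [5, 4, 3, 2, 1, 5]  # hypothetical ratings
labels = [rating_to_label(r) for r in ratings]
print(labels)           # [1, 1, 0, 0, 0, 1]
print(Counter(labels))  # Counter({1: 3, 0: 3})
```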

4-2) Splitting the Dataset

```python
from sklearn.model_selection import train_test_split

y = df['y']
x_train, x_test, y_train, y_test = train_test_split(tf_idf_vect, y, test_size=0.30)

print(x_train.shape)
print(x_test.shape)
```

4-3) Training the Model

Training logistic regression

```python
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

lr = LogisticRegression(random_state=0)
lr.fit(x_train, y_train)
y_pred = lr.predict(x_test)
```
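For a binary problem, `LogisticRegression` scores a row as sigmoid(w · x + b), and `predict` returns 1 when the linear score is positive, which is equivalent to a probability above 0.5. This is why each word's coefficient can later be read as pushing a review toward positive (coefficient > 0) or negative (coefficient < 0). Below is a minimal sketch with hypothetical weights. In the fitted model they would come from `lr.coef_[0]` and `lr.intercept_[0]`.

```python
import math

def predict_proba(x, coef, intercept):
    # Linear score w·x + b squashed by the logistic (sigmoid) function
    z = sum(xi * wi for xi, wi in zip(x, coef)) + intercept
    return 1.0 / (1.0 + math.exp(-z))

coef = [1.5, -2.0, 0.3]   # hypothetical weights, one per vocabulary column
intercept = 0.1           # hypothetical bias
x = [0.0, 0.8, 0.6]       # hypothetical tf-idf row

p = predict_proba(x, coef, intercept)
label = int(p > 0.5)      # same decision as a positive linear score
print(round(p, 3), label)
```

Here the large negative weight on the second word outweighs the rest, so the review is classified as negative.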

Evaluating the classification results

```python
print("accuracy: %.2f" % accuracy_score(y_test, y_pred))
print("Precision : %.3f" % precision_score(y_test, y_pred))
print("Recall : %.3f" % recall_score(y_test, y_pred))
print("F1 : %.3f" % f1_score(y_test, y_pred))

from sklearn.metrics import confusion_matrix

confmat = confusion_matrix(y_true=y_test, y_pred=y_pred)
print(confmat)
```
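The four scores can be recomputed from the confusion matrix, which scikit-learn lays out as [[TN, FP], [FN, TP]] for labels 0 and 1. Below is a minimal sketch on hypothetical labels. Precision and recall here refer to the positive class (1), matching the defaults of `precision_score` and `recall_score`.

```python
def confusion(y_true, y_pred):
    # Rows are true labels (0, 1); columns are predicted labels (0, 1)
    matrix = [[0, 0], [0, 0]]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def scores(matrix):
    (tn, fp), (fn, tp) = matrix
    accuracy = (tp + tn) / (tn + fp + fn + tp)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

y_true = [1, 1, 1, 1, 0, 0, 1, 1]  # hypothetical
y_pred = [1, 1, 1, 1, 1, 0, 1, 0]
cm = confusion(y_true, y_pred)
accuracy, precision, recall, f1 = scores(cm)
print(cm)  # [[1, 1], [1, 5]]
```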

4-4) Rebalancing the Sample

1:1 sampling

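```python
# Draw 275 positive and 275 negative reviews with a fixed seed for a 1:1 balanced subset
positive_random_idx = df[df['y']==1].sample(275, random_state=33).index.tolist()
negative_random_idx = df[df['y']==0].sample(275, random_state=33).index.tolist()
random_idx = positive_random_idx + negative_random_idx

# tf_idf_vect is indexed by row position and df['y'] by index label;
# the two line up because df keeps its default RangeIndex (0..n-1)
X = tf_idf_vect[random_idx]
y = df['y'][random_idx]

x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=33)
print(x_train.shape)
print(x_test.shape)
```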
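Balancing the classes matters because, with a skewed label distribution, a model that always predicts the majority class already reaches a high accuracy. The sketch below shows that baseline and a seeded 1:1 undersampling helper on hypothetical labels. `balanced_indices` is a hypothetical helper built on Python's `random` module, so it will not reproduce the rows that pandas `sample(..., random_state=33)` picks. Also, `n_per_class` must not exceed the size of the smaller class, because `random.Random.sample` raises `ValueError` otherwise.

```python
import random

def balanced_indices(labels, n_per_class, seed=33):
    # Draw the same number of positive and negative row positions without replacement
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    return rng.sample(pos, n_per_class) + rng.sample(neg, n_per_class)

labels = [1] * 90 + [0] * 10  # hypothetical 9:1 imbalance

# Accuracy of a "model" that always predicts 1
majority_accuracy = sum(labels) / len(labels)
print(majority_accuracy)  # 0.9

idx = balanced_indices(labels, n_per_class=10)
balanced = [labels[i] for i in idx]
print(sum(balanced), len(balanced))  # 10 20
```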

Retraining the model

```python
lr = LogisticRegression(random_state=0)
lr.fit(x_train, y_train)
y_pred = lr.predict(x_test)
```

Evaluating the classification results

```python
print("accuracy: %.2f" % accuracy_score(y_test, y_pred))
print("Precision : %.3f" % precision_score(y_test, y_pred))
print("Recall : %.3f" % recall_score(y_test, y_pred))
print("F1 : %.3f" % f1_score(y_test, y_pred))

confmat = confusion_matrix(y_true=y_test, y_pred=y_pred)
print(confmat)
```

5) Positive/Negative Keyword Analysis

```python
plt.rcParams['figure.figsize'] = [10, 8]
plt.bar(range(len(lr.coef_[0])), lr.coef_[0])
```

Printing positive/negative keywords

```python
# (coefficient, column index) pairs, from most positive to most negative
print(sorted(((value, index) for index, value in enumerate(lr.coef_[0])), reverse=True)[:5])
print(sorted(((value, index) for index, value in enumerate(lr.coef_[0])), reverse=True)[-5:])

coef_pos_index = sorted(((value, index) for index, value in enumerate(lr.coef_[0])), reverse=True)
coef_neg_index = sorted(((value, index) for index, value in enumerate(lr.coef_[0])), reverse=False)
coef_pos_index

invert_index_vectorizer = {v: k for k, v in vect.vocabulary_.items()}

# 15 words with the largest positive coefficients
for coef in coef_pos_index[:15]:
    print(invert_index_vectorizer[coef[1]], coef[0])

# 15 words with the most negative coefficients
for coef in coef_neg_index[:15]:
    print(invert_index_vectorizer[coef[1]], coef[0])
```
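The keyword lists depend on how the (coefficient, index) pairs are sorted. The minimal sketch below uses hypothetical coefficients and vocabulary to show two details. The slice `[-k:]` of the descending list ends with the most negative word, and reversing the list gives the negative words from strongest to weakest, the same order as `coef_neg_index`.

```python
coef = [0.9, -1.4, 0.2, 2.1, -0.3]  # hypothetical lr.coef_[0]
invert_index = {0: "친절", 1: "냄새", 2: "조식", 3: "청결", 4: "소음"}  # hypothetical

ranked = sorted(((value, index) for index, value in enumerate(coef)), reverse=True)

top_positive = [invert_index[i] for _, i in ranked[:2]]
tail = [invert_index[i] for _, i in ranked[-2:]]               # most negative word comes last
top_negative = [invert_index[i] for _, i in ranked[::-1][:2]]  # most negative word comes first

print(top_positive)  # ['청결', '친절']
print(tail)          # ['소음', '냄새']
print(top_negative)  # ['냄새', '소음']
```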

FastCampus data analysis course link

bit.ly/3imy2uN